Supervision Theory: A Horseshit-Free Guide to Determining the Value of AI

Exposing the limitations and opportunities of AI through the application of a simple framework.

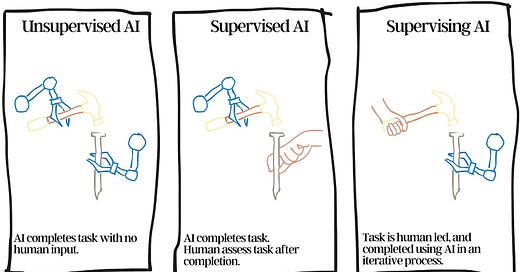

Supervision theory is a framework for better understanding and categorising an AI product based on how humans interact with it.

It will help you quickly determine if a claim has value, or is fugazi.

It should inform the raging but reductive debate as to whether AI is a bubble or actually the “4th industrial revolution”.

Tesla recently rolled out “Full Self Driving (Supervised)”, and the feedback is pretty positive. For example, one woman’s car only tried to kill her once in 4 hours.

This rollout is significant because it takes a different approach to training cars to drive autonomously from both the previous Tesla system and competitors like Waymo and Cruise.

The “old way” to do autonomous driving is deterministic. Waymo, for example, uses “AI” to recognise and categorise objects, such as other cars or stop signs. It predicts the movement of other objects. It uses this information to trigger specific predefined actions, e.g.

If {object} appears in front of vehicle

And vehicle is moving too fast for braking to avoid {object}

Then swerve.

This approach requires a predetermined response to basically any situation that could arise. This leads to “edge cases” where a paper bag on the road plus a particularly bright moon can make an autonomous car completely freak out and start driving in circles and/or sacrifice itself into a wall.
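As a rough illustration of the rule above, here is a minimal sketch of a hand-coded driving rule. The names (`Obstacle`, `choose_action`) and the braking figure are assumptions made up for this example, not anything Waymo has published.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Obstacle:
    distance_m: float  # distance from the front of the vehicle to the object


def stopping_distance_m(speed_mps: float, max_decel_mps2: float = 7.0) -> float:
    """Distance needed to brake to a halt: v^2 / (2a). The 7 m/s^2 default is an assumed figure."""
    return speed_mps ** 2 / (2 * max_decel_mps2)


def choose_action(speed_mps: float, obstacle: Optional[Obstacle]) -> str:
    """One predefined response per situation: the 'old way'."""
    if obstacle is None:
        return "continue"
    if stopping_distance_m(speed_mps) <= obstacle.distance_m:
        return "brake"
    # Moving too fast for braking to avoid the object.
    return "swerve"
```

Every situation the rules don't anticipate (paper bag, bright moon) needs another branch written by a human, which is where the edge cases come from.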

Tesla realised that this is not “it”.

Instead of humans coding specific actions for the cars to take in specific circumstances, Tesla made the call to use “supervised imitation learning”. Teslas are equipped with cameras that record how their legion of drivers react to and handle various driving situations. This footage is uploaded and used to train a neural network model. The car uses this model to make its best guess at how a human would most likely react to various situations, such as a light that goes from green to orange, a curve in the road, an object pushing a pram, or a big beautiful round thing in the sky.
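To make the idea concrete, here is a toy sketch of imitation: log what humans did in each situation, then copy the most common response. It is a lookup table standing in for a neural network, and the situation labels and log entries are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical logged examples: (situation the cameras saw, what the human driver did).
fleet_log = [
    ("light_turns_orange", "slow_down"),
    ("light_turns_orange", "slow_down"),
    ("light_turns_orange", "speed_up"),
    ("curve_ahead", "ease_off"),
    ("pram_crossing", "stop"),
    ("pram_crossing", "stop"),
]


def fit(log):
    """Count how often each action was taken in each situation."""
    counts = defaultdict(Counter)
    for situation, action in log:
        counts[situation][action] += 1
    return counts


def predict(model, situation):
    """Best guess at what a human would do; None if the fleet never saw this situation."""
    if situation not in model:
        return None
    return model[situation].most_common(1)[0][0]


model = fit(fleet_log)
```

Nobody wrote a rule for orange lights here; the behaviour falls out of the footage. The catch is the `None` case: the model only knows what has been caught on camera.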

You don’t have to consider and write code for every potential situation, you just need to have caught shitloads of situations on camera.

It gives Tesla a huge advantage because they’re really the only ones with a fleet of millions of internet-connected cars, many of which are owned by sycophants who will happily agree to upload their video data to daddy Elon, as opposed to, say, Waymo, with their 100 cars on the road.

I have always been very public about my scepticism around the viability of autonomous cars, but I admit this seems like a promising path towards a future where your vehicle only tries to kill you infrequently.

Right now your Tesla in Full Self Driving (Supervised) might only try to mash you into oncoming traffic once every 4 hours, but there is a potential world where your car may only take you and all your passengers out every 6 months or so.

If your car might kill you every 6 months when in Full Self Driving mode then you have to be prepared to grab the wheel and slam on the brakes the second your car so much as twitches.

So you have to supervise the car.

Which raises the question: if an autonomous car can’t be unsupervised, what is the point? You can’t take your eyes off the road, your hands can’t be far from the wheel, and your feet can’t be far from the brake pedal… why bother at all? Driving a car is second nature to most people, our body is largely in an “autopilot” of its own, but all of a sudden “autonomy” would turn driving into a slightly tense situation of high vigilance.

Almost all modern cars have parking sensors that beep as I get closer to the car behind me, and lights on the mirrors that warn of cars in the lane next to me. Lane assist and emergency braking are increasingly popular. This technology augments my driving, filling my blind spots.

The car is supervising me.

There are three different human/car relationships described above:

Unsupervised Car: The dream of a fully autonomous car without a steering wheel or pedals where you can sleep.

Supervised Car: The car leads the behaviour, an attentive human steps in when required (e.g. to stop it crashing into a wall).

Supervising Car: The human leads the behaviour, car technology assists, making ongoing suggestions, and augments the human.

In the Supervision Model, “AI” replaces “car”, but the relationship to the human is the same. This model is useful as a framing device to quickly assess the viability of a product or business. Broadly speaking, my contention is this:

Unsupervised AI - Only useful in specific low stakes contexts, despite getting all the focus from salivating AI evangelists and edgy Substack cynics alike. Largely a red herring.

Supervised AI - Either useless or commodified, particularly for generative AI.

Supervising AI - Boring, but likely where all the value lies.1

Two of the most common takes regarding the future of AI are that:

A: AI can’t be unsupervised therefore it is bust or alternatively;

B: AI getting things occasionally wrong will be fixed and therefore all white collar jobs are gone.

The Supervision framework proves both takes to be naive and rudimentary.

Unsupervised AI

Leaving your bots unattended

Unsupervised AI is an AI that performs tasks that affect the real world with no human oversight or intervention. It is good for customised low stakes tasks. Horrible use case for LLMs.

An Unsupervised AI interacts at scale with people without a human in the loop of the interaction, through one of:

A service where humans interact directly with the raw output of an AI, like an AI doctor or Uber assigning a ride to a driver;

Content distribution, like directly publishing images, code, text or videos to a website, newsfeed or reader app like Netflix;

A series of discrete tasks that form a whole, sometimes referred to as “agents”, like building and running an affiliate marketing business.

Unsupervised AI is AI unleashed. There are no humans in the chain from generation to distribution.

Unsupervised AI is not “generate me a report” that you then read, edit, format, tweak then distribute and present. It’s not even “generate me a report” that you read and then send unedited. An Unsupervised AI would generate daily reports, drawing conclusions from some input data and send them to the relevant people without a human eye seeing them first. This is a legitimate, if not particularly valuable use case for Unsupervised AI.

The fact that AI is probabilistic means it has a fundamental flaw.

Traditionally, computers are deterministic, in that if you say “2 + 2 =” to them, they will add the number 2 and the number 2 together and return 4.

AI is probabilistic in that if you say “2 + 2 =” to, for example, ChatGPT, it will work out which series of characters, words or phrases is probably going to come next, and will probably determine that the next character should probably be “4”. However, in its trove of training data could be a bunch of maths quizzes, so the next character could also be “?”. So it could return “2 + 2 = ?”.2
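The difference can be sketched in a few lines. The probabilities below are made up for illustration and are not taken from any real model; the point is only that one function computes an answer and the other samples one.

```python
import random


def deterministic_add(a: int, b: int) -> int:
    """A traditional program: same input, same output, every time."""
    return a + b


# Invented next-token probabilities for the prompt "2 + 2 =" (an assumption, not real model output).
NEXT_TOKEN_PROBS = {"4": 0.97, "?": 0.03}


def sample_next_token(rng: random.Random) -> str:
    """A probabilistic system: pick the next token according to its likelihood."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so the run is repeatable
samples = [sample_next_token(rng) for _ in range(10_000)]
share_wrong = samples.count("?") / len(samples)
```

`deterministic_add(2, 2)` is always 4. The sampler says “4” most of the time, but across 10,000 asks a small share come back as “?”. More training can shrink that share; it does not turn sampling into arithmetic.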

No matter how much compute we throw at training the models, AI will likely always be capable of making mistakes. It’s just making predictions after all. This may be an intractable problem. Ergo, there is too much risk in letting Unsupervised AI loose on anything above tasks with the lowest of stakes.

Much discourse around the ‘AI Bubble’ ends here, claiming that due to this fact, AI can never add enough value to justify the large associated costs. This is a simplistic analysis that fails to consider the basic interaction paradigm of the technology.

Unsupervised AI is really good at low stakes.

Where Unsupervised AI actually works is when its mistakes don’t really matter.

Unsupervised AI is used at scale all the time.

TikTok uses AI to determine the optimal next video in your feed to keep you engaged.

Uber uses AI to determine the closest passenger to a driver who is finishing a trip.

Amazon uses AI to determine where a new inventory item should be stored to facilitate quickest fulfilment.

Apple uses AI to categorise images you have taken by identifying their contents.3

In all instances the AI is probably getting it “wrong” regularly. But in this case “wrong” basically means “not perfect”. It doesn’t really matter if TikTok could have actually served you something that would have kept you slightly more engaged, nor if it would have actually been 1 minute quicker for an Uber driver to pick up passenger B rather than passenger A. I’m sure Amazon would hate an item taking 30 seconds longer to fulfil than it could have because stock placement was not 100% optimised, but they’re not going to lose a customer over it, nor will Apple if it categorises a baby tiger as a cat. In aggregate, being close to right enough of the time creates tremendous value for the businesses and there is little downside risk.

As opposed to letting an unsupervised LLM go wild on your customers.

The above are not examples of Large Language Models (LLMs). An LLM is trained using a corpus of human language to answer questions and complete thoughts, e.g. ChatGPT. The above are also not diffusion models like those used to generate images, videos or audio. LLMs and diffusion models using transformer technology are where all the froth is in the AI hype right now, but LLMs are particularly unsuited to generating value in Unsupervised use cases.

I say “generating value” because Unsupervised LLMs are currently being plugged into WordPress APIs and used to flood the internet with mass-generated “top 5” lists, how-tos and affiliate marketing articles. There is no doubt the same will eventually happen with AI videos flooding social media with generic news updates, trippy kids videos and other assorted weirdness that creates little value, existing purely to scrape minute amounts of advertising rev-share.

But there is value in incorporating the ability for film editors to, for example, quickly flick through different colours or designs of someone’s dress. Re-do a line of dialogue in post. Try various different lightings in a scene. Generate pitches. Storyboard ideas. Create elements of a background. The technology will be used under the careful eyes of creative people to increase productive capacity and cut costs. This is more boring. This is Supervising AI.

As such, when assessing the value and viability of a product that fits within the Unsupervised AI paradigm, the first question should be “how low are the stakes?”. If the answer is “getting something wrong would be bad” then the product or hype is probably worth ignoring.

Supervised AI

1000 Indians.

Amazon recently announced they were scaling back the ambitions of their “Just Walk Out” technology. JWO enabled shoppers in supermarkets to grab a bunch of items and “just walk out” of the store. Cameras and RFID chips, combined with AI visual recognition systems, would identify the goods and charge the customer’s card.

It was widely reported that Amazon’s Just Walk Out technological innovation was “actually 1000 Indians”.

A lot of the reporting and punditry misrepresented exactly what was going on here, implying that this was not AI, but contractors in India watching you through the camera and manually putting through orders.

The truth is something more akin to AI having a crack at working everything out, and if unsure, a human checks the AI’s work, which also helps to continue training the models (a human-in-the-loop feedback process).

Amazon has determined that in the case of multi-item supermarket style purchases, the tech doesn’t work well enough and isn't getting better, with 7/10 orders having to be checked. They will now focus on retail environments with smaller average order sizes, such as football stadiums and airport shops.
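The “AI has a crack, a human checks when it’s unsure” flow can be sketched as a confidence threshold. Everything here (the `Detection` type, the 0.90 cut-off, the example baskets) is a hypothetical illustration, not a description of how Amazon’s system is actually built.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    item: str          # what the vision system thinks was picked up
    confidence: float  # the model's own confidence, between 0 and 1


# Assumed cut-off: anything the model is less sure about goes to a person.
REVIEW_THRESHOLD = 0.90


def route_order(detections: List[Detection]) -> str:
    """Charge automatically only if every item in the basket clears the threshold."""
    if all(d.confidence >= REVIEW_THRESHOLD for d in detections):
        return "auto_charge"
    return "human_review"


small_basket = [Detection("water", 0.98)]
big_basket = [
    Detection("water", 0.98),
    Detection("avocado", 0.71),
    Detection("bread", 0.95),
]
```

In this sketch a single shaky item sends the whole basket to review, which is one plausible reason a trolley of groceries would need checking far more often than a single drink at a stadium.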

This is an example of Supervised AI.

A Supervised AI takes the lead role to fulfil a discrete goal vaguely stated by a human. It makes decisions that cannot be built on, but instead typically have to be reversed, removed or repaired after the fact.

Examples include:

“Book me a European holiday”

“Write me a calorie counting app”

“Debug this code”

“Write a blog post about financial planning.”

“Make me a Marvel-style movie but with Russell Brand as Basedman taking on woke”

AI, take me on a Holiday

Booking holidays seems to be a recurring theme of AI product demos. I don’t know why. In the Rabbit R1 product launch, founder Jesse Lyu demonstrates:

“I want to take my family to London, there will be two of us and a child aged 12, I'm thinking of January 30 to February 5th, can you plan the whole trip for me with cheap non-stop flights, grouped seats, a cool SUV, and a nice hotel that has wifi….so it’s all been planned out, I just confirm, confirm, confirm, and that’s it.”

I don’t know how useful this is. There are too many elements that will require amending, tweaking, and researching. A better way to do it would be an iterative, step-by-step process that mirrors what humans currently actually do. That is, prompting AI to make suggestions about various activities and refining ideas in a much more human-led approach: Supervising AI.

This would look more like:

Me: Help me put together a holiday for me and my family. What are some child friendly areas in London that still have good restaurants?

AI: I would suggest Chelsea for a traditional London experience, South Kensington for proximity to Museums, or East London for street art and diverse cuisine.

Me: Tell me more about East London, and recommend some places to stay there.

etc.

Once established, directing an AI “agent” to then make the specific bookings would be a valuable feature.

A Supervising AI assists the human with the human parts of the planning process that require curiosity, subjectivity, adventure, excitement. A Supervised AI executes the button clicking and data entry.

I am suspicious of any business that claims to be solving “inconveniences” that are attached to fundamentally human instincts.

This is not to pick on Rabbit. FWIW I pre-ordered one. I think they look cool.

AI, write me an infinite money making app

"You probably recall over the course of the last 10, 15 years almost everybody who sits on a stage like this would tell you it is vital that children learn computer science, now, it's almost the complete opposite." - Jensen Huang, CEO, Nvidia

Reports on the death of the developer were an exaggeration.

The Supervised AI coder idea is to write a prompt for an AI to build you an app or website that will just work. Ignoring the challenges of signing up to the various services and APIs, unfortunately, getting a probabilistic model to write deterministic code is a recipe for immense amounts of frustration.

Ask any dev about debugging someone else’s code. Dumping a whole heap of code, pressing run, then having to painstakingly pull it apart and understand it retrospectively is less efficient than just writing it in the first place. And the most efficient way to write it is with supervising AI assisting you in an iterative process.

To flip the example, the debugging aspect of Supervised AI has some value. Sending your app’s code to an LLM and saying “find me some common bugs” is a discrete supervised task that could be helpful. Big data processing and “needle in a haystack” queries are where Supervised AI shines. However, this has already been largely commodified, is biased towards hyperscale organisations that can afford immense amounts of compute, and should realistically be a feature of every major IDE, cloud service provider and LLM. Not a lot of money on the table.

AI, write me an article about how all AIs fit into an Unsupervised/Supervised/Supervising Trichotomy.

To stick with LLMs, the difference between a Supervised AI blog post and a Supervising AI blog post is whether it is human led. Having a Supervised AI generate content around a broad topic typically leads to something too generic to create value, or something that requires so much editing and refinement that it is not worth the hassle.

The alternative is a human led approach, that might look like a prompt that includes:

the outline of what you want to write

related documents

related statistics or numbers

quotes

a point of view or perspective that you want to reach

Then amending, tweaking, revising, rewriting, formatting - this is Supervising AI.

In this vein, I am deeply suss of any of the many video generators where the user types some prompts, presses go, and then hopes the output is good. Even if the technology were to exist that allows a user to prompt one scene at a time, or even shot by shot, I am sceptical that the generated wheel of fortune that is Supervised AI is the optimal way to convey meaning, or create something good.

Like Unsupervised AI, Supervised AI only really works in situations with much more confined parameters and lower stakes. Using the travel planning example, it may work for business trips where you want to fly in and fly out with minimal interactions with the booked services, or regular trips where you stay at the same hotel and fly the same airline. In which case there is limited need for particularly sophisticated AI, if any AI at all.

Supervising AI

Table for 1

I recently attended the Sydney Hilton for a breakfast event pitched ostensibly as a “director briefing” on AI Transformation. The speaker was Microsoft’s EVP and Chief Commercial Officer Judson Althoff.

Althoff wandered back and forth across the stage wearing a Britney Spears headset microphone to address the room full of company directors. He was giving American. He had the delivery and the polish you would expect of a man who was making the same presentation in 4 different cities in 3 days.

It’s easy to be cynical of these events, but good directors are typically cynical people, especially when it comes to technology investment. They have been burnt before by cost and time overruns of technology uplifts, and by the unfulfilled promises of digital transformation. So while Althoff certainly used the opportunity to regularly mention various Microsoft AI products and services, being a pro and knowing the audience, he was never going to go full “AI will replace 80% of your employees in the next few years”.

Althoff’s pitch was on the much more tangible promise of using natural language to query internal company data and documents from the comfort of your own Microsoft Teams window.

The primary example he gave of the benefits of AI integration was how Microsoft was providing suggested responses to their customer service agents. Instead of manually typing responses or searching for help desk articles, generative AI would provide them with a suggested response that referenced the appropriate article. If the generated answer wasn’t correct it could be edited or ignored for the human to do human things.

Althoff then sat down on an on-stage lounge chair and had a scripted interview with Telstra’s Kim Krogh Andersen, their Group Executive for Product & Technology. Andersen regaled the audience with how Telstra was already implementing AI by…also providing customer service agents with suggested responses.

While not particularly creative, there are undeniably “efficiencies” to be gained here.

This is Supervising AI. The AI parses the text in the chat room, providing suggestions to the agent, augmenting their ability to quickly respond to customer queries. The agent can edit and amend the copy. Ultimately the agent has the agency.

The claims made by Microsoft were that generative AI led to somewhere in the realms of 60-80% quicker resolutions, which implies that you may well be able to get by with 60-80% fewer customer service representatives. The neural nets will only get better the more they are trained, so one day we may be able to fire even more entry level employees, or at least your contractor will.

Even when adding a reality and cynicism tax, plus factoring in additional ongoing technology expenses there is likely a cost saving for organisations of something like 50% with very little downside or risk.

Global annual spend on text based customer service is around $36b, therefore efficiencies from Supervising AI are real and can be significant.4

This is where generative AI flourishes. The human and the AI work hand in hand, going back and forth, iterating on each other's output. The human is more efficient, and there is not a lot of rope to allow the AI to go bonkers. The output is more human, and therefore adds more value.

It would be remiss of any business with a customer help desk not to use these technologies, much as it would be remiss of organisations not to have some kind of coding “co-pilot” to make coding more efficient and remove the “grunt work”.

Microsoft really nailed the branding here, and evidently came to the same conclusion that Supervising AI, an AI Co-pilot, is where the majority of tangible value will be created.

“Marginal increased productivity” is not as sexy as “AI replaces job of humans”, even though it nets out to the same thing.

So where does that leave us?

The discourse has constructed an ultimatum where the current swaths of investment in AI are only justified if there is a “killer app” of the size of Facebook or Google or the iPhone.

Supervising AI will create incremental efficiencies for tasks such as customer service, coding, research, copy and reporting. These efficiencies could easily be in the hundreds of billions per annum and are happening now.

Summary

Unsupervised AI

AI assesses information and takes actions in the world free from human interaction or oversight.

Broadly only useful when mistakes are accepted as constant and have largely imperceptible impact.

Supervised AI

The AI has a discrete goal and takes the lead in determining a path to achieving it.

Humans observe the behaviour or the outcome and step in to rectify, repair, undo, delete, turn it off or just bin it.

Unclear whether there is any value here other than to produce low quality spam. Often creates more work.

Supervising AI

A human led iterative approach to achieving a goal where the AI augments the human. Both work together, back and forth, until a goal is achieved.

Creates efficiencies, often with clear financial benefit.

If you made it this far, you should definitely listen to my podcast, Down Round with James Hennessey

1. Not to be confused with Supervised Learning vs Unsupervised Learning in the context of Machine Learning.

2. ChatGPT could in fact call its code module or Wolfram Alpha and have the non-LLM run a deterministic process, but people know what ChatGPT is, so give me a break.

3. Apple uses over 100 “machine learning” models like this on iOS.

4. This is FTX-tier financial modelling, so take it with a grain of salt, but directionally correct: According to Grand View Research, the global outsourced customer service market size was USD 92.93 billion in 2021.

Estimates vary significantly on the extent of outsourcing, anywhere from 30% to 80%, so let’s say it's 50%; therefore, annually something like $180b is spent on customer service.

Oracle says 64% of customer service is text, and text is 25% the cost of a phone call.

So let’s say, globally, text based annual customer service cost is $34b; therefore, based on the 50% efficiencies we estimated above, that’s a global saving of $17b annually.
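For anyone who wants to poke at the napkin maths, here is a sketch of the simplest reading of the figures above: total spend, times the text share, times text’s relative cost. Treating 64% × 25% as text’s share of total spend is a simplifying assumption made here, so it lands in the same tens-of-billions ballpark as the rounded figures rather than reproducing them exactly.

```python
# Inputs quoted in the footnote above.
outsourced_market_usd_b = 92.93  # Grand View Research, 2021
outsourced_share = 0.50          # assumed midpoint of the 30%-80% range
text_share = 0.64                # share of customer service that is text
text_relative_cost = 0.25        # text costs 25% of a phone call
efficiency = 0.50                # saving assumed for Supervising AI

# Scale the outsourced market up to all customer service spend.
total_spend_usd_b = outsourced_market_usd_b / outsourced_share

# Simplest reading: text's share of spend is its volume share times its relative cost.
text_spend_usd_b = total_spend_usd_b * text_share * text_relative_cost

# Apply the assumed efficiency gain.
annual_saving_usd_b = text_spend_usd_b * efficiency

print(f"total: ${total_spend_usd_b:.0f}b, text: ${text_spend_usd_b:.0f}b, saving: ${annual_saving_usd_b:.0f}b")
```

Change any one input (the outsourcing share especially) and the answer moves by billions, which is the grain of salt.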
